AI's biggest regulatory blind spot

We're building powerful tools, but the rulebook is still being written. Here's how to stay ahead.

HT4LL-20250902

Hey there,

Waiting for regulators to hand us a perfect AI roadmap is the single biggest mistake we can make right now.

The promise of AI to revolutionize clinical trials is undeniable—faster recruitment, better risk assessment, non-invasive monitoring. Yet, every step forward feels like a step into a regulatory fog. We're grappling with "black box" models, the very real risk of AI hallucinations compromising patient safety, and the fundamental challenge of proving AI's value in a way that will satisfy the FDA or EMA. This uncertainty doesn't just slow down innovation; it creates a tangible risk for our entire R&D pipeline.

So, how do we move forward with confidence when the path isn't fully paved? Today, we’re going to talk about:

How to build an internal framework that proves AI's value and safety.

Why human oversight is non-negotiable for mitigating risks like hallucination.

What it takes to move from a reactive to a proactive regulatory strategy.

If you’re an R&D leader trying to balance groundbreaking innovation with the pragmatic need for regulatory approval, then here are the resources you need to dig into to build a future-proof AI strategy:

Weekly Resource List:

Artificial intelligence and clinical trials: a framework for effective adoption (8 min read)

Summary: This article argues that the biggest barrier to AI adoption in clinical trials isn't the technology itself, but the lack of a standardized way to measure its value. It calls for a value-based framework, developed with patient input, to quantify AI's impact beyond simple cost savings, ensuring we can prove its worth to stakeholders and regulators.

Key Takeaways: You must lead the charge in defining how to measure AI’s value, focusing on trial informativeness and patient-centric outcomes, not just operational efficiency. Proactively creating these metrics internally will give you a massive head start in regulatory conversations.

A scoping review of artificial intelligence applications in clinical trial risk assessment (30 min read)

Summary: A deep dive into 142 studies reveals that while AI is increasingly used for risk assessment, many models are built on biased, retrospective data and evaluated with potentially misleading metrics. The authors stress the need for higher quality data, more robust evaluation, and integrated models that assess risk holistically.

Key Takeaways: Your AI is only as good as your data. It's critical to invest in curating diverse, high-quality datasets and mandate the use of stronger evaluation metrics (like F1-score or MCC) to truly understand your model's performance and present a credible case to regulators.

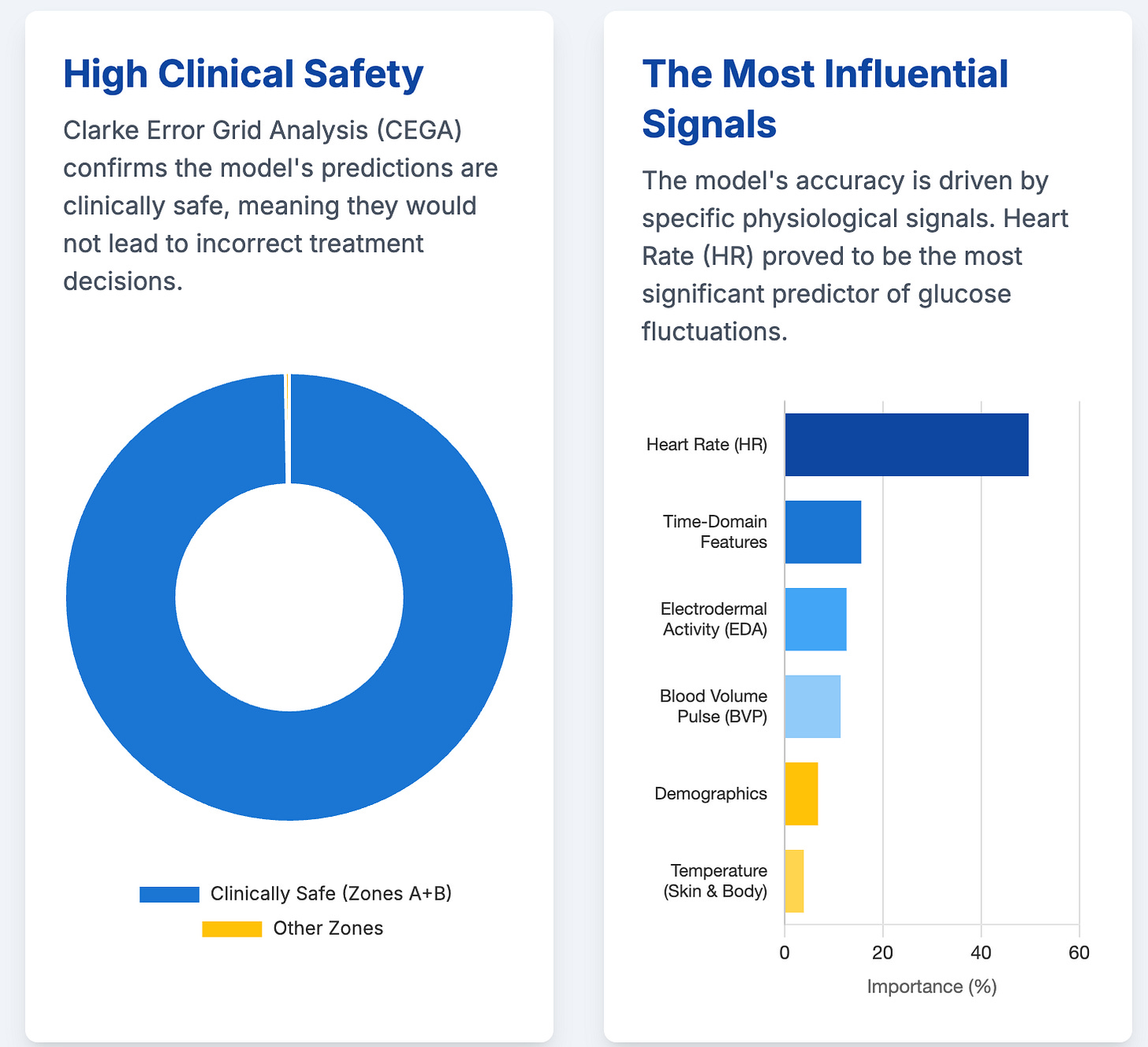

Digital biomarkers for interstitial glucose prediction in healthy individuals using wearables and machine learning (33 min read)

Summary: This study showcases the power of using wearable sensor data and ML to predict glucose levels non-invasively, a huge step forward for digital biomarkers. However, its success in a small, healthy cohort highlights the major hurdle ahead: validating these tools across diverse, real-world patient populations.

Key Takeaways: The future involves non-invasive monitoring, but regulatory approval will hinge entirely on generalizability. Prioritize funding extensive validation studies in varied populations and incorporate explainable AI (XAI) to build the clinical trust necessary for adoption.

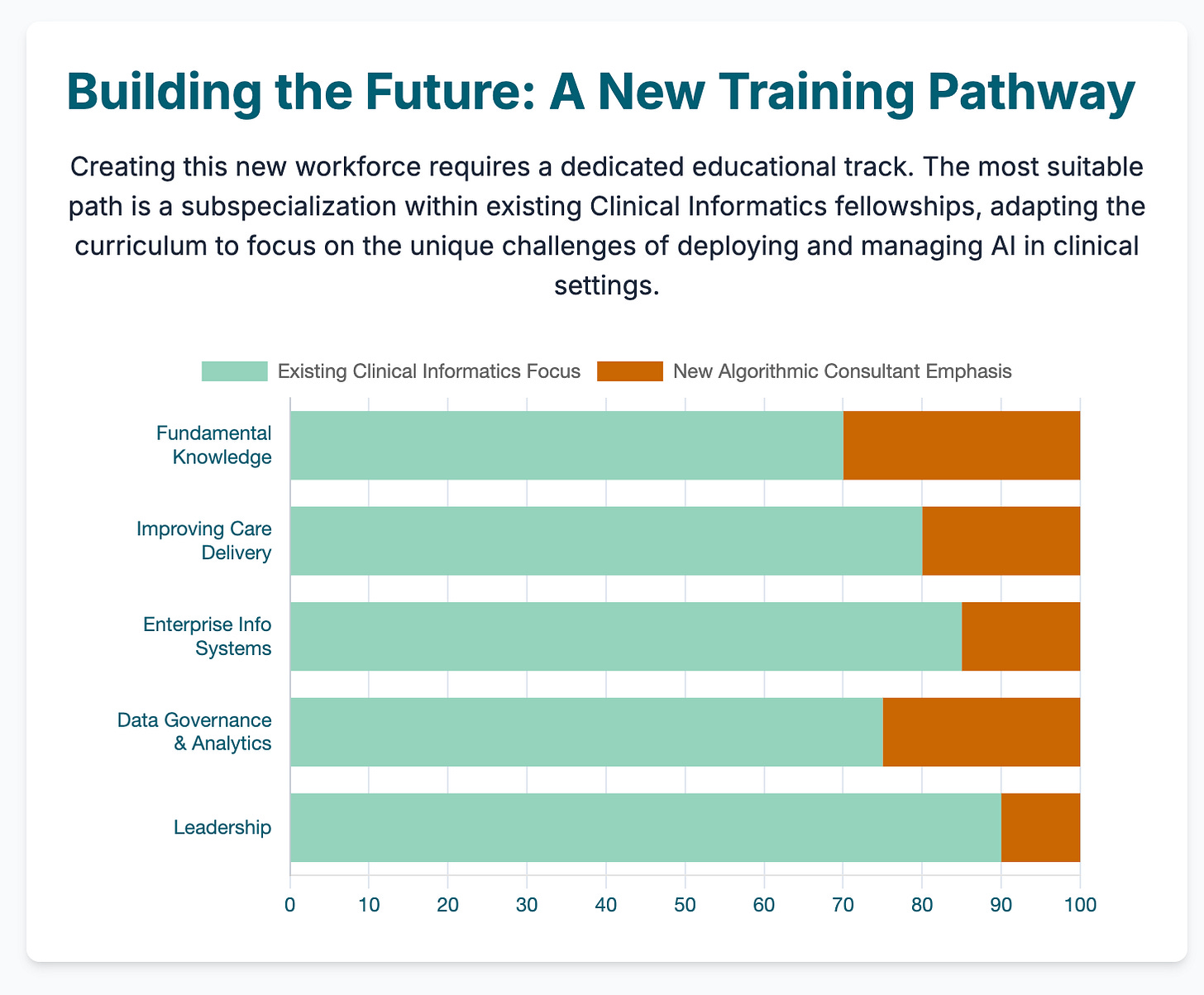

The algorithmic consultant: a new era of clinical AI calls for a new workforce of physician-algorithm specialists (14 min read)

Summary: This piece proposes a new specialist role—the "algorithmic consultant"—to bridge the gap between complex AI tools and clinicians. Arguing that direct physician-AI interaction is often flawed, this expert would oversee AI selection, interpretation, and governance, ensuring safe and ethical deployment.

Key Takeaways: Stop designing AI tools just for physicians. Anticipate the need for expert intermediaries. Your AI solutions should have interfaces and auditable features built for these specialists, which will de-risk adoption for health systems and simplify liability concerns.

Multi-model assurance analysis showing large language models are highly vulnerable to adversarial hallucination attacks during clinical decision support (20 min read)

Summary: This sobering study reveals that even sophisticated LLMs have an alarmingly high hallucination rate (up to 82%) when fed fabricated clinical details. Simple fixes like prompt engineering help but don't solve the problem, posing a significant risk to patient safety.

Key Takeaways: The risk of AI hallucination is not a theoretical problem; it's a clear and present danger. You must build rigorous, multi-layered validation pipelines with human-in-the-loop oversight to catch these errors. Documenting this process is non-negotiable for proving safety to regulators.

3 Pillars for Building a Regulatory-Ready AI Strategy (Even When the Rules Aren't Clear)

In order to confidently deploy AI in our clinical trials and get ahead of regulatory scrutiny, we need to shift our thinking from compliance to strategic preparation.

Here’s how to build a robust foundation for your AI initiatives that will stand up to the inevitable questions from regulators.

1. Build an Ironclad Evidence Framework

The first thing you need is a rigorous internal process for proving that your AI tools are not just efficient, but safe and effective. This goes far beyond a simple accuracy score.

You need to establish a value-based framework that quantifies AI's impact on trial informativeness and patient outcomes, not just cost-minimization. This means adopting more robust evaluation metrics (like F1-score or Matthew’s Correlation Coefficient) that are suited for complex, imbalanced clinical data. Most importantly, this framework must include adversarial testing to actively find and measure your model’s breaking points, especially for risks like LLM hallucinations. Think of it as building a comprehensive regulatory dossier before you're ever asked for one.

2. Design for Expert Human Oversight

The future of clinical AI isn't a world without doctors; it's a world with new kinds of experts. The idea that we can create a "black box" so perfect that any clinician can use it flawlessly is a fantasy.

Instead, we must design our AI systems for a new specialist: the "algorithmic consultant." This is a physician-data science hybrid who can interpret, validate, and manage AI tools at the point of care. This "human-in-the-loop" model is our most reliable safety net. It acknowledges AI's inherent limitations and creates a system of checks and balances that regulators will find far more compelling than any claim of algorithmic perfection.

3. Shift from Passive Compliance to Proactive Engagement

Finally, you cannot afford to sit back and wait for the regulatory landscape to solidify. By the time the final rules are written, you'll already be behind.

Leaders in this space have an opportunity to help write the rules. This means actively engaging with regulatory bodies like the FDA and EMA. Share your internal validation methodologies and data on AI performance and safety. Participate in industry consortiums to help establish standards for value assessment and risk mitigation. By demonstrating a deep commitment to transparency and evidence-based innovation, you can help shape a regulatory future that is both rigorous and supportive, rather than one that is reactive and stifling.

Leading transformation with AI at work first starts with you. Want to truly take advantage of the GenAI tools and increase your productivity? Sign up to beta test my GenAI Personal Compass Assessment and get a roadmap to upskill yourself.

PS...If you're enjoying Healthtech for Lifescience Leaders, please consider referring this edition to a friend.

And whenever you are ready, schedule time to get a free advisory consultation.