Why most AI pilots fail to scale (and how to fix it)

It’s not the algorithm. It’s the workflow.

HT4LL-20251125

Hey there,

We spend too much time obsessing over the accuracy of an algorithm and not enough time obsessing over whether a human will actually use it.

You have likely seen the “flash in the pan” scenario before: a digital health pilot that looks incredible on a quarterly review slide but crumbles the moment you try to roll it out to five different clinical sites. The issue usually isn’t the technology itself. It’s the friction—clinicians ignoring “black box” outputs, infrastructure that creates dual data entry, or tools that work in a pristine lab but fail in a clinic with spotty Wi-Fi. This disconnect slows down your trials, frustrates your site investigators, and keeps your organization stuck in “pilot purgatory.”

We are shifting gears today to look at scalability.

How to build trust so clinicians actually use the tools.

Why infrastructure (even offline modes) matters more than features.

How to move from siloed data to multimodal intelligence.

Let’s dive in.

If you want to move your organization from tentative experiments to robust, company-wide AI adoption, these are the resources to dig into:

Weekly Resource List:

Digital Detection of Dementia in Primary Care (8 min read)

Summary: A randomized clinical trial compared a “usual care” approach against AI screening alone and AI combined with patient input. It highlighted that while passive digital markers alone were ignored by clinicians, adding a patient-reported outcome created a “check and balance” system that validated the data.

Key Takeaway: The “AI only” approach failed. The combined approach increased diagnoses by 31%. To scale AI, you must embed mechanisms (like patient-reported outcomes) that build clinician trust in the machine’s output.

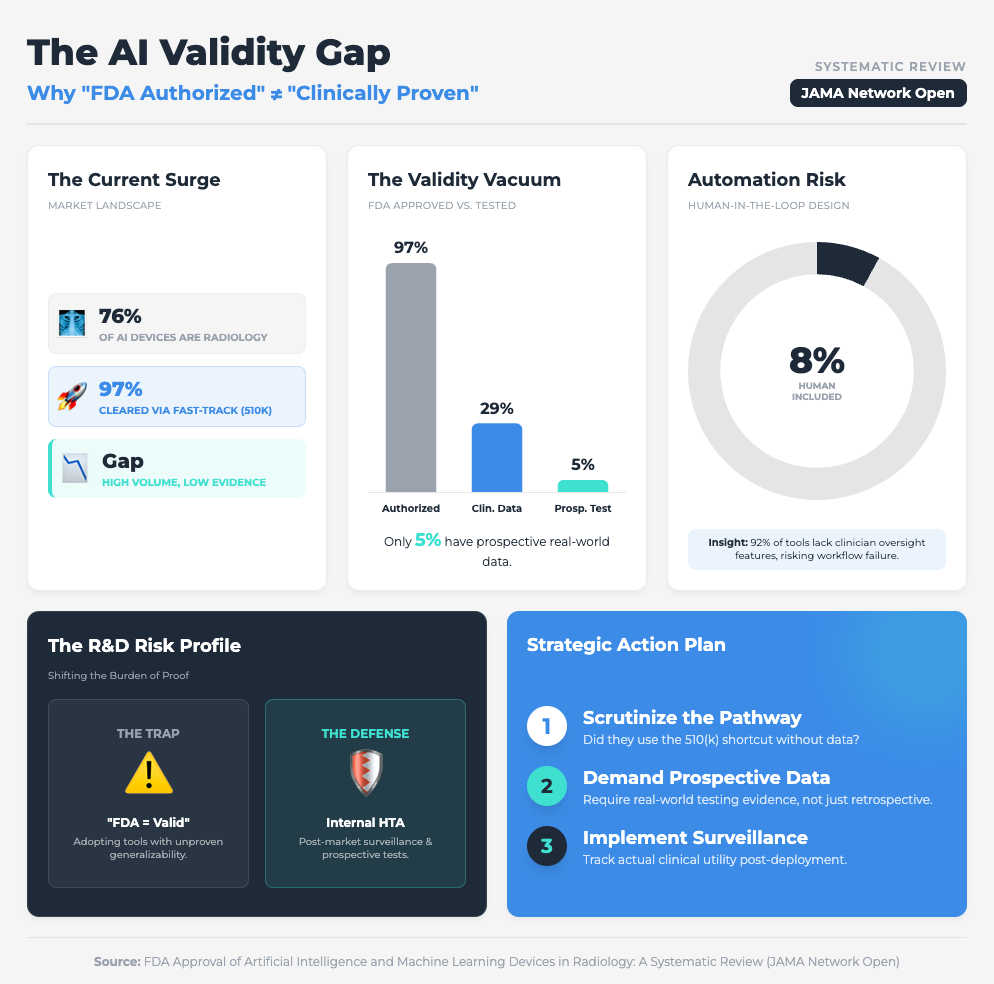

FDA Approval of AI/ML Devices in Radiology (4 min read)

Summary: A systematic review found that 76% of FDA-cleared AI devices utilized the 510(k) pathway, a route that often bypasses rigorous, independent clinical data requirements. With only 8% of these devices including a human-in-the-loop in testing, there is a significant gap between regulatory clearance and proven clinical generalizability.

Key Takeaway: Don’t conflate regulatory clearance with clinical utility. To ensure scalability, demand prospective testing from your vendors to prove the tool works in the real world, not just on a test dataset.

Five Ways AI Is Transforming Cancer Care (7 min read)

Summary: This briefing outlines how AI is drastically speeding up recruitment (up to 3x faster) while simultaneously overwhelming doctors with “tool fatigue” and an excess of dashboards. The report emphasizes that while AI can identify up to 25% more eligible patients, it must be integrated into existing workflows to avoid increasing the administrative burden on oncologists.

Key Takeaway: Scalability dies when you add workload. Successful implementation requires integrated tools that demonstrably save hours on documentation, rather than just adding another dashboard to check.

Enablers and Challenges from a Digital Health Intervention (10 min read)

Summary: An analysis of a health app in resource-constrained settings (Afghanistan) highlighted how infrastructure flaws can derail even the most well-intentioned digital interventions. High staff turnover and the requirement for dual data entry (paper and digital) were identified as major friction points that fueled resistance and threatened sustainability.

Key Takeaway: If your clinical trial sites have unstable internet, cloud-only AI will fail. Mandating “offline-first” capabilities and avoiding dual data entry are non-negotiable for global scalability.

The AI Revolution: Multimodal Intelligence in Oncology (15 min read)

Summary: Multimodal AI (MMAI) integrates heterogeneous datasets, including imaging, genomics, and clinical records, and significantly outperforms single-mode models in predictive accuracy. Beyond sharper prognosis, this approach enables “digital twins” for synthetic control arms, potentially reducing the need for large control groups in trials.

Key Takeaway: The future of R&D is MMAI and synthetic control arms. However, you cannot scale this without radical interoperability (standards like FHIR and OMOP) to break down data silos.

3 Steps To Build Scalable AI Solutions With Lasting Impact Even if You Have Skeptical Stakeholders

To achieve faster clinical trials and high-quality data, you’re going to need three things: a focus on workflow, real-world validation backed by resilient infrastructure, and interoperability across your data types.

Let’s break down how to move from “cool pilot” to “standard of care.”

1. Engineer Trust into the Workflow

The first thing you need is to stop treating AI as a standalone answer and start treating it as a “second opinion” that needs validation.

Why? Because the JAMA study on dementia showed us that passive AI alone was ineffective. It was only when they combined the AI with a simple Patient-Reported Outcome (PRO) that diagnoses jumped by 31%. When the patient provided input first, the clinician trusted the subsequent AI flag more.

What you need to do:

Don’t just drop an algorithm into your clinical trial sites. Pair it with a human element—like a brief PRO or a validation step—that engages the user. This “human-in-the-loop” design isn’t a bug; it’s a feature that mitigates the “black box” fear and drives adoption.
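To make the pattern concrete, here is a minimal Python sketch of a two-signal routing rule: a passive AI score never reaches the clinician as a bare flag, and patient input is requested first. This is a hypothetical illustration, not the design used in the dementia trial. The `ScreeningResult` fields, the `route` function, and both thresholds are assumptions you would replace with validated values for your own instruments.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ScreeningResult:
    ai_risk: float             # hypothetical passive-AI risk score in [0, 1]
    pro_score: Optional[int]   # hypothetical patient-reported score; None if not yet collected


def route(result: ScreeningResult, ai_threshold: float = 0.7, pro_threshold: int = 2) -> str:
    """Decide what reaches the clinician. Thresholds are illustrative placeholders."""
    ai_flag = result.ai_risk >= ai_threshold
    if result.pro_score is None:
        # No patient input yet: request it rather than surfacing a bare AI flag.
        return "collect_pro" if ai_flag else "no_action"
    pro_flag = result.pro_score >= pro_threshold
    if ai_flag and pro_flag:
        return "flag_for_clinician"  # two independent signals agree
    if ai_flag or pro_flag:
        return "clinician_review"    # signals disagree: a human decides
    return "no_action"


if __name__ == "__main__":
    print(route(ScreeningResult(ai_risk=0.85, pro_score=None)))  # collect_pro
    print(route(ScreeningResult(ai_risk=0.85, pro_score=3)))     # flag_for_clinician
    print(route(ScreeningResult(ai_risk=0.85, pro_score=0)))     # clinician_review
```

The design choice worth noting: when the two signals disagree, the rule hands the case to a human instead of resolving it automatically. That is one way to express the “check and balance” idea as a workflow rule.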

2. Stress-Test for the “Real World” (Not Just the Lab)

Next, you need to ruthlessly audit the validation data of your AI partners and the infrastructure of your sites.

The radiology review above found that most FDA-cleared AI devices reach the market via the 510(k) pathway, which often skips prospective clinical testing. Furthermore, as the Sehatmandi app study in Afghanistan showed, infrastructure shapes adoption: if a tool needs a live internet connection to function, it will fail at resource-constrained sites.

What you need to do:

The Vendor Audit: Ask your AI vendors: “Show me the prospective clinical data,” not just their retrospective accuracy scores.

The Infrastructure Rule: Mandate offline capabilities for any digital data collection tool used in global trials. If the Wi-Fi cuts out and the app crashes, you lose data. If it queues data locally and uploads later, you win.
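A store-and-forward queue is one common way to get the “queue locally, upload later” behavior. The sketch below uses only Python’s standard library: every record is written to a local SQLite file before any network call and is deleted only after an upload succeeds. The `OfflineQueue` class and the `upload` callback are hypothetical names; in a real tool, `upload` would wrap your data platform’s API.

```python
import json
import sqlite3
from typing import Callable


class OfflineQueue:
    """Store-and-forward queue: save to local disk first, upload when online."""

    def __init__(self, path: str = "pending.db") -> None:
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS pending ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, payload TEXT NOT NULL)"
        )
        self.db.commit()

    def save(self, record: dict) -> None:
        # Persist locally before any network call, so a dropped connection loses nothing.
        self.db.execute("INSERT INTO pending (payload) VALUES (?)", (json.dumps(record),))
        self.db.commit()

    def pending_count(self) -> int:
        return self.db.execute("SELECT COUNT(*) FROM pending").fetchone()[0]

    def flush(self, upload: Callable[[dict], bool]) -> int:
        """Upload queued records oldest-first; stop at the first failure.
        `upload` is a caller-supplied function that returns True on success."""
        sent = 0
        rows = self.db.execute("SELECT id, payload FROM pending ORDER BY id").fetchall()
        for row_id, payload in rows:
            try:
                ok = upload(json.loads(payload))
            except OSError:  # includes ConnectionError: treat as "still offline"
                ok = False
            if not ok:
                break
            # Delete only after the upload is confirmed.
            self.db.execute("DELETE FROM pending WHERE id = ?", (row_id,))
            self.db.commit()
            sent += 1
        return sent


if __name__ == "__main__":
    q = OfflineQueue(":memory:")
    q.save({"subject_id": "S-001", "visit": 1, "weight_kg": 72.5})
    q.save({"subject_id": "S-002", "visit": 1, "weight_kg": 64.0})
    print(q.flush(lambda rec: False), q.pending_count())  # 0 2 (offline: nothing lost)
    print(q.flush(lambda rec: True), q.pending_count())   # 2 0 (back online)
```

Because a record is deleted only after a confirmed upload, a connection that drops mid-acknowledgement can cause the same record to be sent twice. The receiving system should therefore deduplicate, for example on a record ID.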

3. Demand Radical Interoperability

Finally, you need to break down the walls between your data types to leverage Multimodal AI (MMAI).

You cannot explore product differentiation or synthetic control arms if your imaging data sits in one server and your omics data sits in another. MMAI allows for “digital twins” and highly precise patient stratification, but it requires data to speak the same language.

What you need to do:

Stop building parallel systems. Require that new AI tools integrate with existing Health Management Information Systems (HMIS) or Electronic Health Records (EHR). Specifically, push for standards like FHIR and OMOP in your contracts. This ensures that as your company grows, your AI can ingest heterogeneous data to create the high-quality insights necessary for regulatory approvals.
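As a rough illustration of what “speaking the same language” means, the Python sketch below maps a site’s weight measurement into a minimal FHIR R4 `Observation` resource, coded with LOINC 29463-7 (body weight) and a UCUM unit. The input field names and the `site_record_to_fhir_observation` helper are hypothetical. A production mapping would also need patient identity resolution and validation against the FHIR profiles your partners actually use.

```python
import json
from datetime import date


def site_record_to_fhir_observation(record: dict) -> dict:
    """Map a hypothetical site-level weight record to a minimal FHIR R4 Observation.
    The input keys (subject_id, visit_date, weight_kg) are illustrative assumptions."""
    return {
        "resourceType": "Observation",
        "status": "final",
        "code": {
            "coding": [
                # Shared terminology (LOINC) is what lets other systems interpret the value.
                {"system": "http://loinc.org", "code": "29463-7", "display": "Body weight"}
            ]
        },
        "subject": {"reference": f"Patient/{record['subject_id']}"},
        "effectiveDateTime": record["visit_date"].isoformat(),
        "valueQuantity": {
            "value": record["weight_kg"],
            "unit": "kg",
            "system": "http://unitsofmeasure.org",  # UCUM units
            "code": "kg",
        },
    }


if __name__ == "__main__":
    obs = site_record_to_fhir_observation(
        {"subject_id": "S-001", "visit_date": date(2025, 11, 25), "weight_kg": 72.5}
    )
    print(json.dumps(obs, indent=2))
```

Once every site emits the same resource shape and codes, downstream tools can pool the data without site-specific parsers. That pooling is the precondition for the multimodal analyses described above.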

PS: If you're enjoying Healthtech for Lifescience Leaders, please consider referring this edition to a friend.

And whenever you are ready, there are 2 ways I can help you:

AI Roadmap Kickstart Guide: Download my free guide on the 7 critical questions every pharma leader must answer before launching an AI initiative.

Strategy Session: Book a complimentary 30-minute AI Strategy Session with me to diagnose the biggest opportunities for AI within your current R&D pipeline.